
Reflections on trust, tools and the reality of automation

This blogpost was written by Jon Cumiskey, Head of Information Security. The views expressed are personal and do not necessarily align with the views of SEP2.

As someone who has been inherently sceptical of AI in the past (“it’s just a bunch of IF statements, it’s just a marketing buzzword”), I have found the rise of chatbots, fake imagery and hallucinations interesting to watch. What I am writing here is an opinion from a particular vantage point: at SEP2 we want to help as many organisations as possible be as secure as possible, which I hope is a common enough aim to be relatable.

It is interesting to see the shifting sands in this part of the technological world and get a good view of what benefits and challenges are emerging, especially when viewed through our security lens. This blogpost is not meant to be overly critical of any specific vendor; it is merely one point of view at a snapshot in time.

I was in a meeting with our CEO Paul Starr and a customer a few weeks back where Paul decided to try out Microsoft Copilot to summarise the meeting notes for us. We were discussing malware protection and compensating controls in Google Cloud with the customer at the time. Copilot succinctly summarised the point as “We will install the Google Malware”, missing out some key parts of the sentence. This type of stuff is fun to laugh at, and then manually correct, until it makes a big enough error, or until it gets smart enough to grow tired of our nit-picking and berating and puts us out to pasture (a.k.a. Minimum Basic Income). Aside from that, it did a good job of summarising a two-hour discussion in a couple of sentences.

One of the older ideas that really stuck with me from the Machine Learning days is that a key challenge was “understandability” (i.e. why the model made the decision that it made). The amount of utility that a tool can have relies on scrutiny: being able to understand its reasoning and knowing how fallible or trustworthy it is. This almost sounds like working with people. While that sounds incredibly obvious, the horror stories of people misjudging that trust level will no doubt continue.

Gemini

At SEP2, we’ve been getting a view of the publicly available AI-powered functionality from Google’s Gemini model (formerly known as Duet) through the Chronicle SIEM/SOAR platform on the SecOps Enterprise packages. While this platform has cut down our engineering time significantly and given us a scalable platform to thrive on, I want to know how we can deliver more to our customers. Google are releasing more features and capabilities for this soon too, and anyone who was at Google Next knows that they are a little bit excited about this particular topic.

At present, Gemini exists in two places in Chronicle: a SIEM Search Helper and a SOAR AI Investigation Widget.

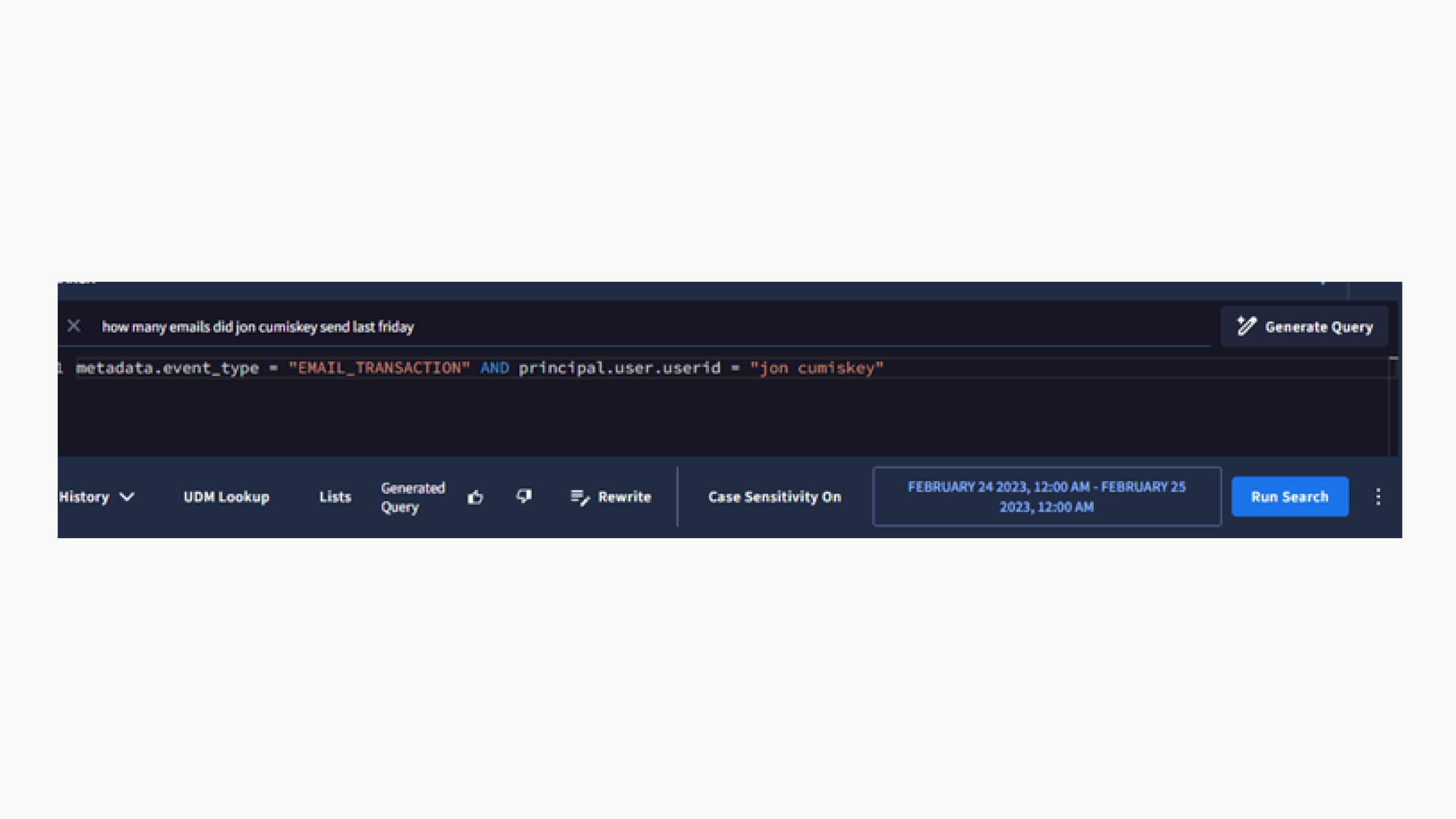

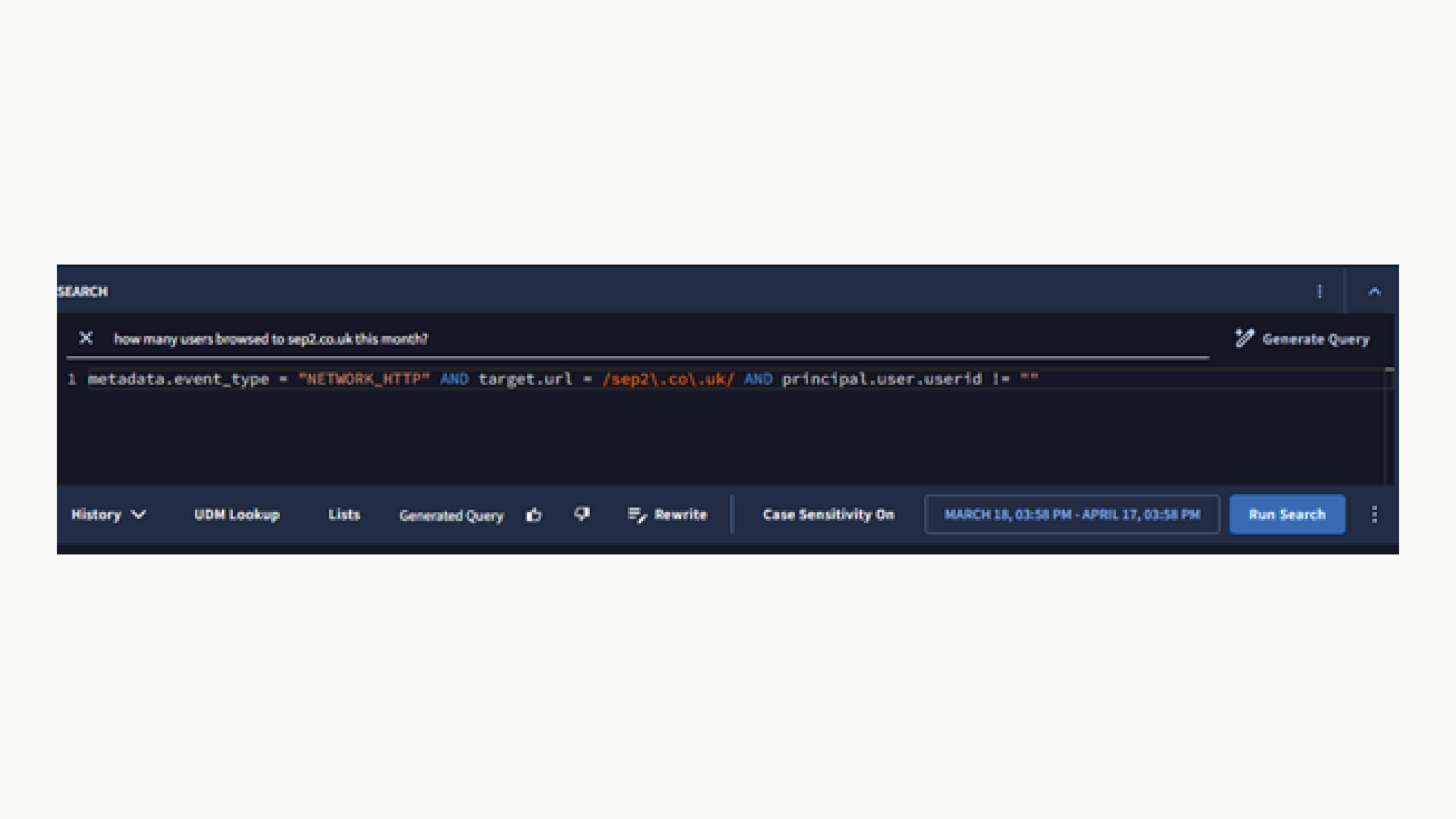

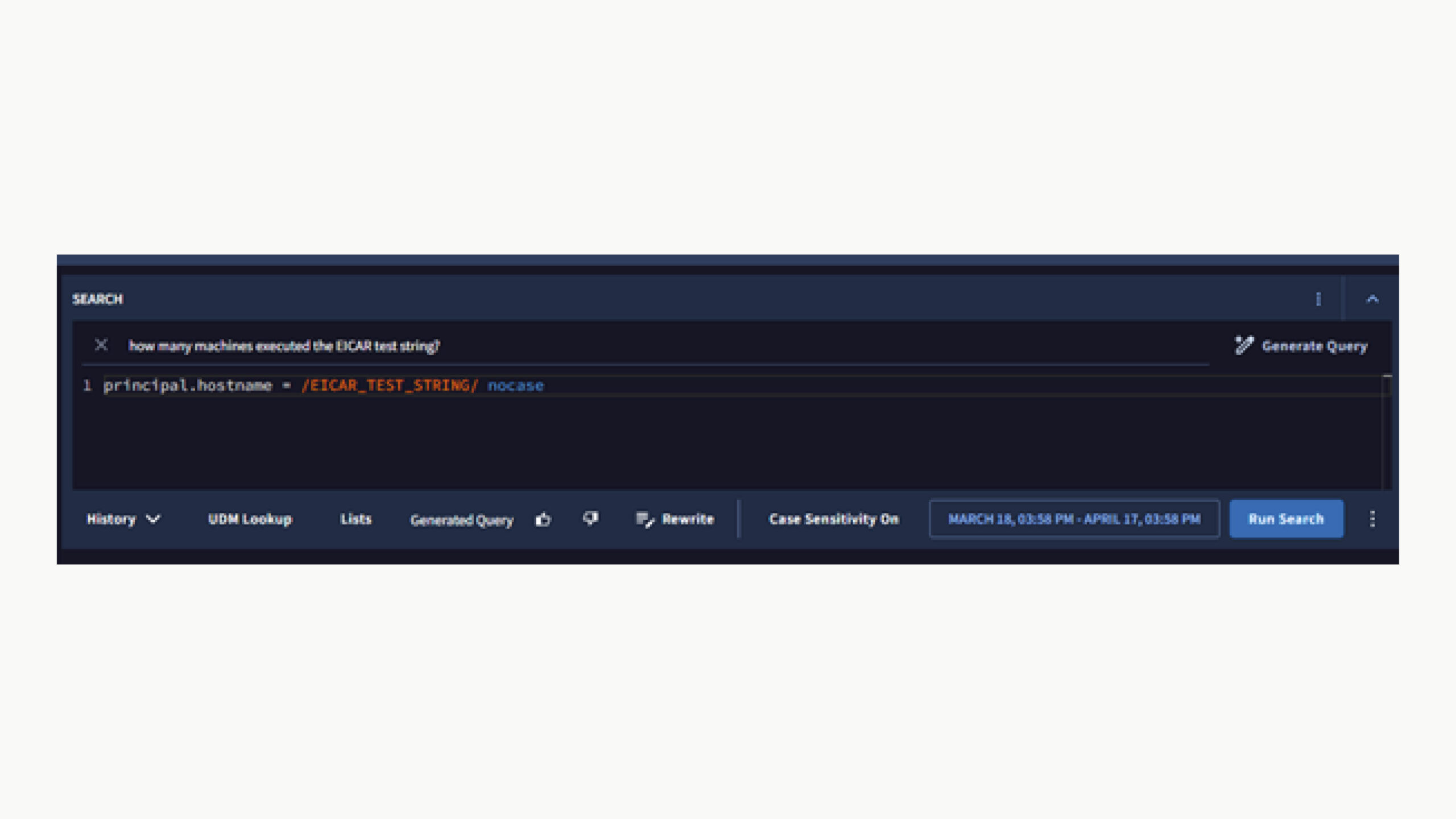

SIEM Search Helper

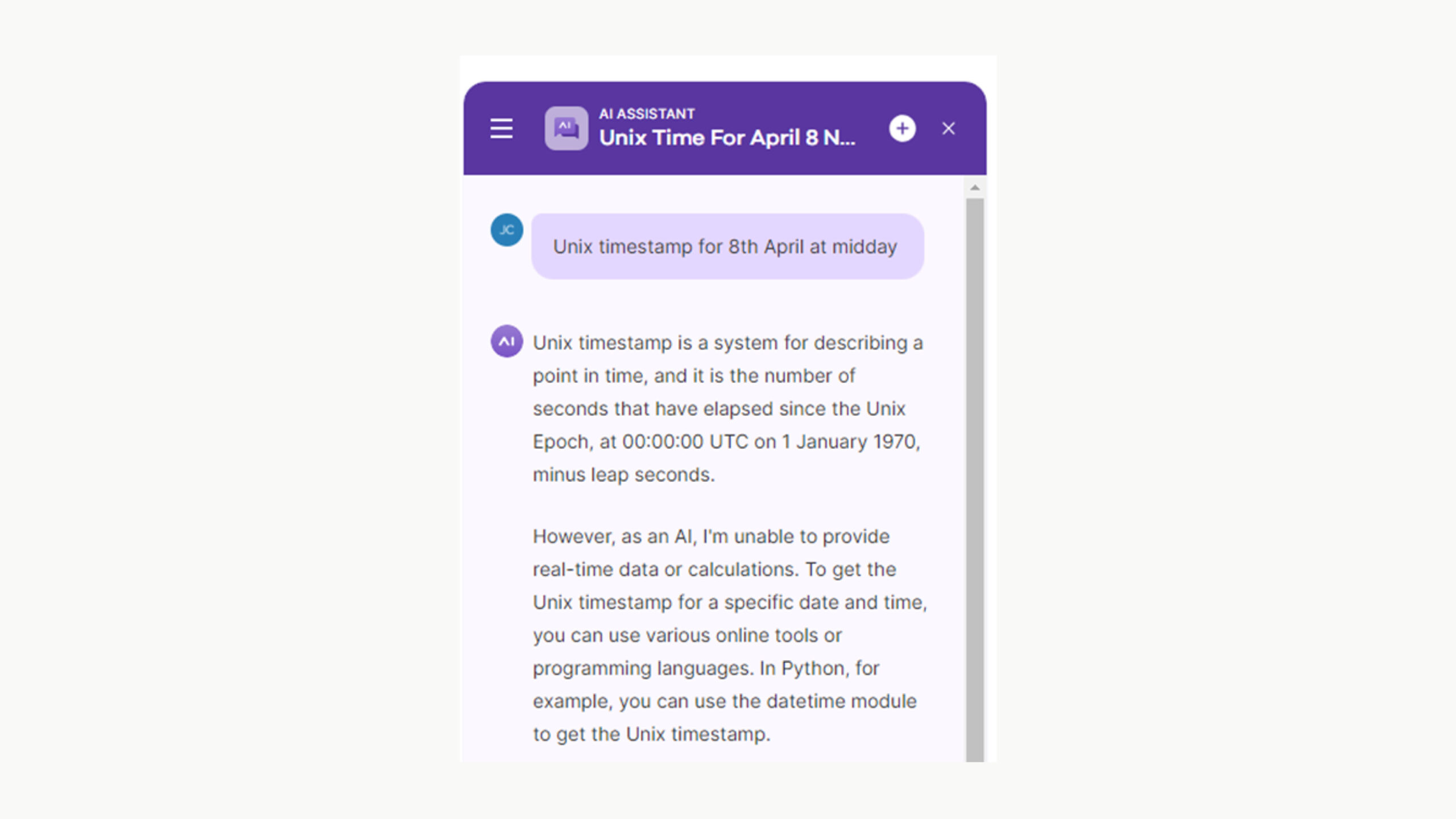

This has a very simple modus operandi, which is to help you write structured UDM (Unified Data Model) queries in Chronicle without having to know the specific syntax of UDM.
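For anyone who has not written UDM by hand, here is a rough sketch in Python of the kind of structured query the helper saves you from typing. It is illustrative only: the `build_udm_query` and `last_friday` helpers and the `jon@example.com` address are my own assumptions for the example, not part of Chronicle, and the field names are what I would expect for an email search rather than a statement of what Gemini generates.

```python
from datetime import date, timedelta


def last_friday(today: date) -> date:
    """Return the most recent Friday strictly before `today`."""
    # date.weekday(): Monday is 0, Friday is 4.
    days_back = (today.weekday() - 4) % 7 or 7
    return today - timedelta(days=days_back)


def build_udm_query(filters: dict[str, str]) -> str:
    """Join field/value pairs into a single AND-ed UDM-style search string."""
    return " AND ".join(f'{field} = "{value}"' for field, value in filters.items())


# Hypothetical example: emails sent by one (made-up) address.
query = build_udm_query({
    "metadata.event_type": "EMAIL_TRANSACTION",
    "network.email.from": "jon@example.com",  # assumed sender address
})

# The day to search is worked out separately from the query text.
search_day = last_friday(date(2024, 5, 15))

print(query)
print(search_day.isoformat())
```

The point is less the code than the contrast: a natural-language question has to become exact field names, exact values and an exact time window before a search can run, and that translation is the step the helper is taking on.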

So, let’s see a few examples.

Below, I’ve asked Chronicle how many emails I sent last Friday: